Unlocking the Power of Generative AI: From Core Concepts to Real-World Applications

Generative AI has rapidly captured global interest, but what exactly is it, and how is it transforming the way we work and interact with technology?

The introductory session of our June 2025 Applied AI (XD131) course, led by Bryan Pon and Greg Maly of Exchange Design, provided a foundational understanding of this rapidly evolving field and introduced key challenges to be explored further in the course.

Slide from XD131 presentation: Generative AI in context

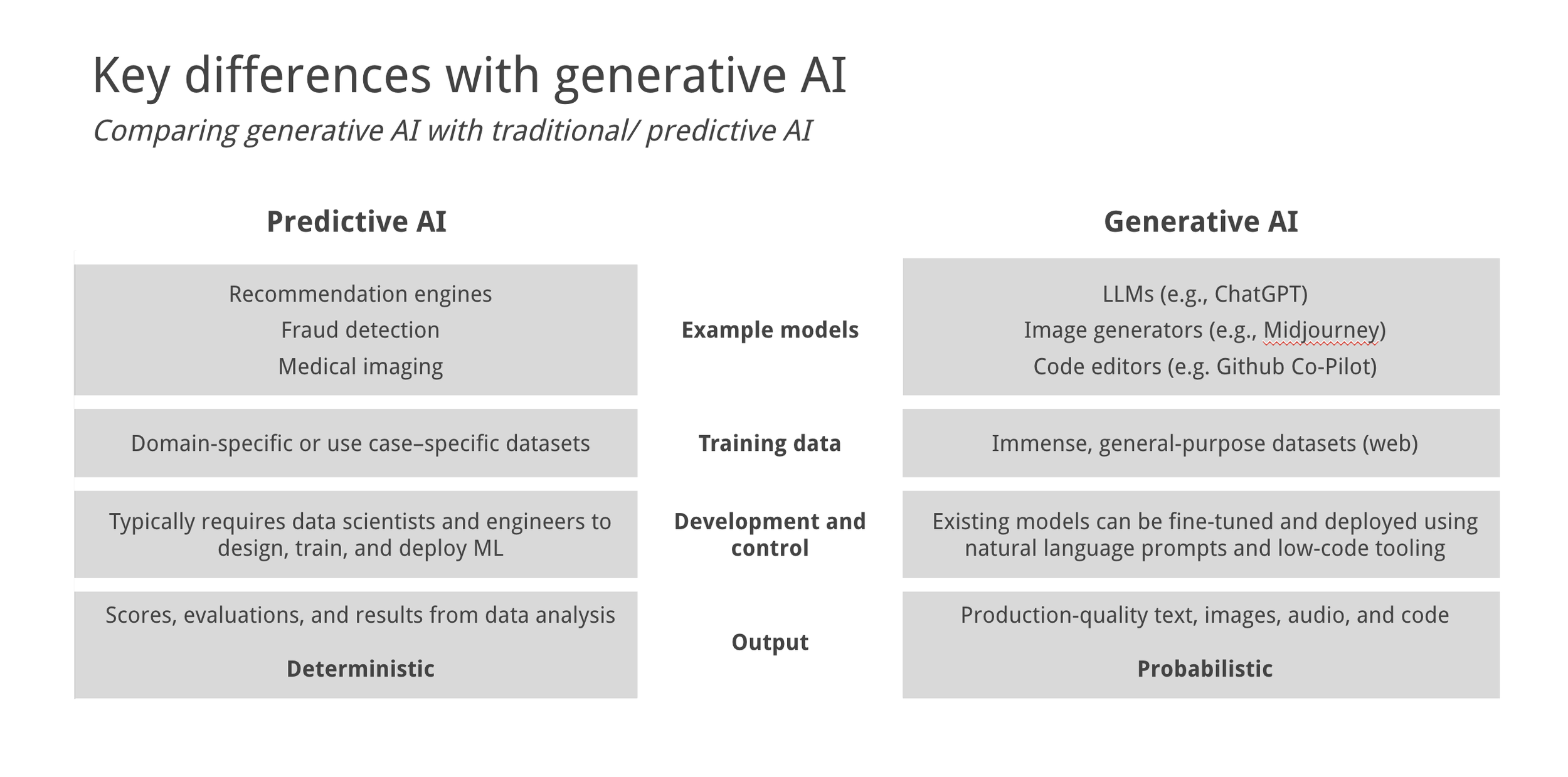

Generative AI vs. Predictive AI: A Fundamental Shift

While AI innovations have been ongoing for decades, generative AI represents a significant leap forward. Key distinctions from traditional predictive AI include:

Predictive AI (e.g., recommendation engines, fraud detection) relies on domain-specific training data, is typically developed by data scientists, and produces deterministic outputs (same input, same output).

Generative AI (e.g., ChatGPT, Midjourney) is trained on massive, diverse datasets (often the open public web), can be controlled with natural language prompts, allows for broader user involvement in deployment, and generates probabilistic outputs like text, images, audio, and code. This probabilistic nature means outputs may vary even with the same inputs.
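To make the deterministic versus probabilistic distinction concrete, here is a minimal Python sketch. It is a toy illustration rather than how any real model works: the probability table and both function names are invented for this example.

```python
import random

# Hypothetical probabilities, invented for illustration only.
NEXT_WORD_PROBS = {"sunny": 0.6, "cloudy": 0.3, "rainy": 0.1}

def predictive_output(probs):
    """Deterministic: always return the single most likely label."""
    return max(probs, key=probs.get)

def generative_output(probs, rng):
    """Probabilistic: sample a label in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random()  # unseeded, so repeated runs can differ
deterministic_runs = [predictive_output(NEXT_WORD_PROBS) for _ in range(5)]
sampled_runs = [generative_output(NEXT_WORD_PROBS, rng) for _ in range(5)]

print(deterministic_runs)  # the same label every time
print(sampled_runs)        # labels may vary from run to run
```

The first list never changes between runs, while the second can, which is the behavior described above as "outputs may vary even with the same inputs."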

Slide from XD131 presentation: Key differences with generative AI

The Large Language Model (LLM) Lifecycle Explained

At the heart of many generative AI applications are Large Language Models (LLMs). While each is different, they tend to share a similar lifecycle:

Pre-training: Models ingest huge amounts of data to learn patterns and calculate probabilities.

Fine-tuning (Optional): A built model can be optimized for specific use cases by feeding it more domain-specific, high-quality data and instructions.

Prompting: This is the input query or instructions users give to the model.

Inference: The phase where the model calculates probabilities to generate a response based on available data.
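The pre-training and inference steps above can be sketched with a deliberately tiny word-pair model in Python. This is an assumption-laden toy, not a real LLM: the corpus, the pretrain and infer functions, and the choice to always pick the most probable next word are all made up for illustration (production models use neural networks and usually sample rather than pick the top word).

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for "huge amounts of data".
CORPUS = "the cat sat on the mat . the cat ate the fish ."

def pretrain(text):
    """Count which word follows which, then turn counts into probabilities."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    model = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        model[word] = {nxt: n / total for nxt, n in followers.items()}
    return model

def infer(model, prompt, max_new_tokens=3):
    """Extend the prompt by repeatedly picking the most probable next word."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        options = model.get(tokens[-1])
        if not options:
            break  # the model never saw this word during pre-training
        tokens.append(max(options, key=options.get))
    return " ".join(tokens)

model = pretrain(CORPUS)
print(model["the"])             # learned probabilities for words after "the"
print(infer(model, "the cat"))  # prompt extended one word at a time
```

Here the prompt is "the cat", and inference extends it one word at a time using only the probabilities learned during pre-training.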

The data available to an LLM significantly influences its responses. This includes its original training data (a snapshot in time), the ability to search the open internet for current information, and custom user-provided data (e.g., internal policies, reports) often accessed via Retrieval Augmented Generation (RAG).

Slide from XD131 presentation: LLMs basic setup and function

A Growing AI Ecosystem

The AI landscape is expanding beyond just core models. Discussing AI applications requires understanding the specific tools and services being used. This includes direct web application access (like ChatGPT), LLM integration into third-party apps via APIs (e.g., Notion, Microsoft Word), and platforms (like OpenAI's platform) that allow building custom applications with features like agent workflows and user authentication.

Core Capabilities of Generative AI

Generative AI excels at several key functions:

Search and Synthesize: Locating and summarizing information, often through semantic search.

Analyze and Classify: Evaluating structured and unstructured data based on instructions, such as reviewing applications or tagging text.

Generate and Transform: Creating and localizing various content types (text, images, code).

Guide and Engage: Providing personalized experiences, recommendations, and assistance for complex tasks.

Practical Use Cases in Action

The session introduced several compelling examples of generative AI in practice:

Combining Language Models with Search (RAG): This approach uses a search engine to retrieve relevant, up-to-date information, which the LLM then uses to generate contextually rich answers. This is commonly seen in tools like Copilot and custom GPTs. For enterprise-wide RAG platforms, it is advisable to start with smaller, more bounded use cases, given content curation limitations and the significant cost and effort involved in integration and in ensuring role-level security across diverse data sources.
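The retrieve-then-generate pattern can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the documents are invented, simple word overlap stands in for a real search engine or semantic search, and the final call to a language model is left out, so the sketch stops at the prompt that would be sent.

```python
import re

# Hypothetical internal documents, invented for this example.
DOCUMENTS = {
    "leave-policy": "Staff accrue 20 days of annual leave per year.",
    "travel-policy": "International travel requires approval 14 days in advance.",
    "it-policy": "Laptops are replaced every four years.",
}

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc_id: len(q & tokenize(documents[doc_id])),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents, doc_ids):
    """Combine the retrieved text and the question into one prompt."""
    context = "\n".join(f"[{d}] {documents[d]}" for d in doc_ids)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

question = "How many days of annual leave do staff get?"
doc_ids = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, DOCUMENTS, doc_ids)
print(prompt)  # this text would be passed to an LLM of your choice
```

Keeping retrieval separate from generation is also what makes bounded pilots practical: the document set can be curated and access-controlled before any model sees it.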

Machine Learning for Categorizing Information: LLMs can parse unstructured data (e.g., news reports) to extract key details (who, what, when, where) and structure them into tables or visualizations. While LLMs can classify text without extensive training data, building a dedicated training dataset often leads to faster, cheaper, and more accurate results, though it requires more upfront time. Tools like Elicit and GPT for Sheets can assist with tagging data.
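As a rough illustration of the "dedicated training dataset" route, the Python sketch below trains a very small naive Bayes text classifier on labeled examples. The headlines, labels, and function names are invented for this example, and a real project would need far more data and a proper evaluation step.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical labeled headlines, invented for illustration.
TRAINING_DATA = [
    ("Floods displace thousands in coastal region", "disaster"),
    ("Earthquake damages homes and roads", "disaster"),
    ("Parliament passes new budget bill", "politics"),
    ("President announces election date", "politics"),
]

def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())

def train(examples):
    """Count words per label; these counts are the whole 'model'."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab

def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_docs)
        for word in tokenize(text):
            # Add-one smoothing so unseen words do not zero out a label.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

model = train(TRAINING_DATA)
print(classify("Floods damage roads in the north", model))
print(classify("Election bill debated in parliament", model))
```

Once trained, a classifier like this runs locally in milliseconds and at no per-request cost, which is the trade-off against the upfront labeling effort.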

Organizational Deployment: Generative AI is being deployed broadly in two areas: internal operations (automating processes, improving productivity for cost reduction) and programming/delivery products (personalizing client experiences, supporting users for new or improved services). Internal operations often present more immediate "low-hanging fruit" opportunities.

Challenges exist, such as generating accurate infographics and visualizations. While direct image generation can be inconsistent, giving language models access to code interpreters and data (e.g., Python for plotting) can yield more accurate, though perhaps less visually polished, results.
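The point about code-driven charts can be illustrated with a short Python sketch that computes bar heights directly from the numbers and writes them out as SVG. The data and the bar_chart_svg helper are invented for this example, and a plotting library would normally be used in practice; the standard library is used here only to keep the sketch self-contained.

```python
# Hypothetical counts, invented for illustration.
DATA = {"Search": 42, "Classify": 27, "Generate": 35}

def bar_chart_svg(data, bar_width=60, gap=20, max_height=200):
    """Return an SVG bar chart whose bar heights are computed from the data."""
    peak = max(data.values())
    parts = []
    for i, (label, value) in enumerate(data.items()):
        height = round(value / peak * max_height)  # exact scaling, no guessing
        x = gap + i * (bar_width + gap)
        y = max_height - height
        parts.append(
            f'<rect x="{x}" y="{y}" width="{bar_width}" '
            f'height="{height}" fill="steelblue"/>'
        )
        parts.append(f'<text x="{x}" y="{max_height + 15}">{label}: {value}</text>')
    width = gap + len(data) * (bar_width + gap)
    body = "\n".join(parts)
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" '
        f'height="{max_height + 20}">\n{body}\n</svg>'
    )

svg = bar_chart_svg(DATA)
print(svg)  # save this text as chart.svg and open it in a browser
```

Because every bar is derived arithmetically from the data, the proportions are correct by construction, even if the styling is plain.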

Slide from XD131 presentation: The emerging AI stack

Introducing AI Agents and Orchestration: The Future of Automation

One of the most exciting areas of innovation is AI agents. An agent is an assistant that can manage processes autonomously with external tools. Using the analogy of a wedding planner (agent) versus a friend (LLM), an agent has specialized knowledge, access to tools (like florists and caterers), and the ability to act autonomously (e.g., make purchases, arrange logistics), going beyond simple back-and-forth interactions.

The components of an agent include:

Access to an LLM for interpretation.

External tools (e.g., Slack API).

Credentials and permissions.

Internal documentation.

Memory for multi-step processes.

Agents can enhance automation and capabilities beyond single model interactions, for instance, by autonomously monitoring a Slack channel for IT requests, searching documentation, and responding to users.
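The IT help desk example can be sketched as a simple loop in Python. Everything here is a stand-in: fake_llm_pick_topic replaces a real LLM call with keyword matching, post_reply only appends to a list instead of calling the Slack API, and credentials and permissions are omitted entirely. The names and documentation snippets are hypothetical.

```python
# Hypothetical documentation, invented for this sketch.
DOCS = {
    "vpn": "Restart the VPN client, then sign in again with your work account.",
    "password": "Use the self-service portal to reset your password.",
}

def fake_llm_pick_topic(message):
    """Stand-in for an LLM call: decide which documentation topic applies."""
    for topic in DOCS:
        if topic in message.lower():
            return topic
    return None

def search_docs(topic):
    """Tool: look up internal documentation."""
    return DOCS[topic]

def post_reply(outbox, user, text):
    """Tool: stand-in for posting to a chat channel (no real API call)."""
    outbox.append((user, text))

def run_agent(messages):
    memory = []  # record of steps taken across the multi-step process
    outbox = []  # replies the agent "sent"
    for user, message in messages:
        topic = fake_llm_pick_topic(message)  # interpret the request
        memory.append(("interpreted", user, topic))
        if topic is None:
            post_reply(outbox, user, "Escalating to a human on the IT team.")
            memory.append(("escalated", user))
            continue
        answer = search_docs(topic)           # use a tool
        post_reply(outbox, user, answer)      # act on the result
        memory.append(("replied", user, topic))
    return outbox, memory

outbox, memory = run_agent([
    ("ana", "My VPN keeps disconnecting"),
    ("raj", "The printer is on fire"),
])
for user, text in outbox:
    print(f"@{user}: {text}")
```

Even in this toy form, the components listed above are visible: an interpretation step, tools, documentation, and memory of what has been done, with an escalation path when the agent cannot help.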

Slide from XD131 presentation: What’s an agent?

Orchestrating agentic workflows involves designing multi-step processes for agents. Greg Maly showcased n8n, a low-code automation platform, for building workflows to automate repetitive tasks like generating standardized briefing documents from reports by precisely extracting information. One compelling use of agents is for RFP (Request for Proposal) responses, where a complex job is broken down into manageable workflow steps: pulling capability statements and CVs, generating technical approaches, and checking compliance and stylistic consistency. This approach is much more effective than trying to achieve such complexity with simple prompt engineering.
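The idea of breaking an RFP response into workflow steps can be sketched in plain Python. This does not use n8n or its API; it only mirrors the concept of chained steps. The step functions, the placeholder content, and the word-limit requirement are all hypothetical, and in a real workflow the drafting step would call an LLM.

```python
# Hypothetical RFP workflow; each step takes and returns a shared state dict.
def pull_capability_statements(state):
    state["capabilities"] = ["Data strategy", "AI training"]  # placeholder content
    return state

def draft_technical_approach(state):
    # A real workflow would call an LLM here; this just fills a template.
    state["draft"] = "We will apply " + " and ".join(state["capabilities"]) + "."
    return state

def check_compliance(state):
    limit = state["requirements"]["max_words"]
    state["compliant"] = len(state["draft"].split()) <= limit
    return state

WORKFLOW = [pull_capability_statements, draft_technical_approach, check_compliance]

def run_workflow(steps, state):
    """Run each step in order, logging its name so the run can be audited."""
    for step in steps:
        state = step(state)
        state.setdefault("log", []).append(step.__name__)
    return state

result = run_workflow(WORKFLOW, {"requirements": {"max_words": 50}})
print(result["draft"])
print("Compliant:", result["compliant"])
print("Steps run:", result["log"])
```

Each step does one narrow job and can be tested or replaced on its own, which is the main advantage over packing the whole task into a single prompt.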

The combination of LLMs, natural language prompts, and low-code/no-code orchestration tools is a powerful enabler, especially for smaller organizations without extensive technical capacity. However, gaps exist within and between each stage of digital transformation.

This series features insights from our June 2025 Applied AI (XD131) course. Listen to the AI-generated podcast for an audio recap of this event.